
Graham writes ...

In case you were wondering what happened to the Artemis 1 mission …? Hurricane Ian put paid to the launch attempts and the SLS had to ‘run’ for cover back to the Vehicle Assembly Building. The date of the next launch attempt is uncertain at the time of writing, but it is hoped that it may be in November 2022.

Graham Swinerd Southampton, UK October 2022


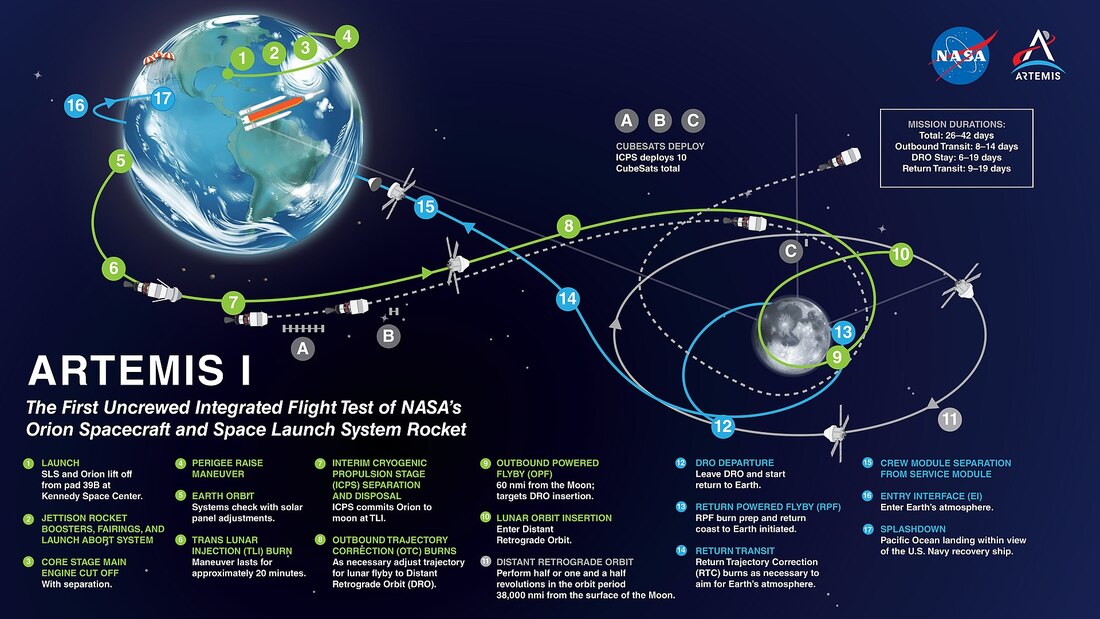

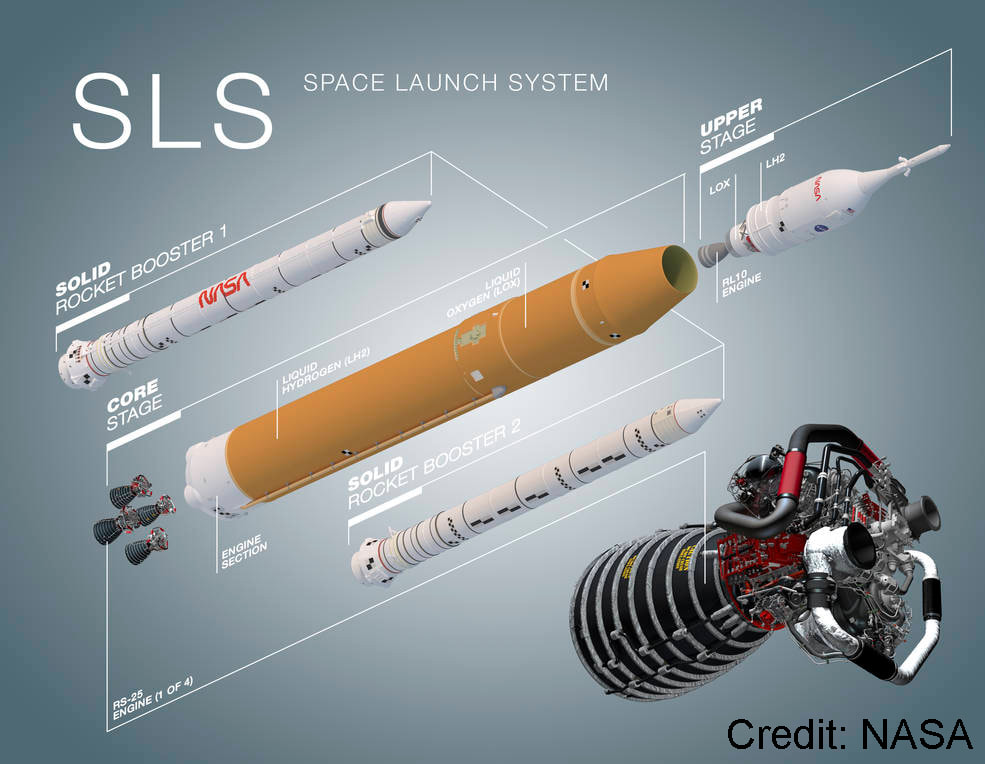

The asteroid Dimorphos just moments before impact.

Graham writes ...

The DART spacecraft successfully impacted the asteroid Dimorphos in the early hours of this morning (UK time: 27 Sept), and I thought you might want to see what happened! Please click here to see a video courtesy of BBC News showing the moments just before impact. We will learn in the coming days whether the experiment was successful in changing Dimorphos's orbital speed, and consequently its orbit around Didymos.

Graham Swinerd Southampton, UK September 2022

Graham writes …

Alongside the impact event of the DART mission (see next blog post below), the other big happening this month is the proposed launch of the Artemis 1 mission – the first uncrewed test of the systems that are intended to return astronauts to the moon. The objectives of the Artemis programme are to establish a permanent crewed base on the moon, and to enable and test the systems required for future missions beyond the moon. In response to the lack of ethnic diversity among the Apollo moon-walking astronauts, another unofficial aim is to take women and Black astronauts (and indeed Black women astronauts) to the lunar surface. NASA has already identified its ‘Artemis Team’ of 18 American candidates, and the involvement of other space agencies – ESA (European Space Agency), JAXA (Japan Aerospace Exploration Agency) and the CSA (Canadian Space Agency) – ensures a mix of other nationalities in future landing crews.

The Artemis programme will also be supported by other initiatives, in particular the Lunar Gateway, which is a small, lunar-orbiting space station. This is expected to be in place by about 2027, and is intended to operate as a solar-powered communications node, a science laboratory and a short-term habitation module for astronauts.

Unfortunately, the efforts to launch the Artemis 1 mission have not been successful so far.
The first attempt took place on 29 August, but was abandoned because the temperature of one of the four main engines was indicated to be above the maximum allowable for launch. A second attempt, on 3 September, was also aborted, this time due to a leak in a service arm fuel supply line. I have to admit that I tuned in on both occasions to NASA’s excellent live stream HD TV coverage with great excitement and anticipation. At my ‘great age’, I feel very impatient to see space exploration programmes up and running again – I just want them to get on with it! The next launch opportunity is 27 September, with a back-up on 2 October, and I shall be tuning in again to the live coverage.

The Space Launch System (SLS) Artemis 1 waits on the launch pad, prior to the recent launch attempts.

The Artemis 1 mission, which is planned to last 38 days, will be the first outing of the Orion spacecraft. At first sight, the spacecraft configuration looks very much like the Apollo Command and Service modules, the obvious difference being that Orion has deployable solar arrays for power generation. The power system on Apollo used fuel cells, which are effectively chemical engines that need an input of hydrogen and oxygen to produce electricity and water. This change necessitates an additional water tank to supply the crew’s needs. However, the most significant difference is that the cone-shaped Orion crew module is considerably larger than the equivalent Apollo module, with about 30% more interior volume. Consequently, Orion missions will accommodate four crew members for a typical mission duration of about 21 days. Another significant difference is that the Orion Service module is European in design and manufacture.
This cylindrically-shaped module is based upon ESA’s Automated Transfer Vehicle (ATV) (1) and provides the necessary services, such as power, propulsion, communications and life support & environmental control, required to keep the crew alive and to ensure a successful mission. The Orion system’s main engine, located at the rear end of the Service module, is a souped-up version of the Space Shuttle’s orbital maneuvering system engine, with a thrust of 33 kN. Mention of this prompts memory of a very unlikely encounter a while ago with a NASA engineer who worked on the development of this Shuttle propulsion technology for the Orion spacecraft. In 2011, my wife and I had a lovely holiday break walking the coastal path of Pembrokeshire, South Wales, and on this particular day I was wearing my NASA baseball cap. Coming the other way was a couple, and the gentleman was wearing a similar cap. We started to converse, and found that we shared a professional interest in space technology. He told me about his work on the Orion programme, and I told him about the upcoming, and ground-breaking ESA comet lander mission, called Rosetta, which he’d known nothing about. A remarkable meeting, in a beautiful place in lovely weather, which would not have happened if I hadn’t been wearing my NASA cap to protect me from the sun!  Anyway, back to Artemis. One thing that surprised me about the new Space Launch System (SLS) was that NASA has returned to the Saturn V philosophy used on the Apollo programme launches. This is along the lines of stacking everything– the crew and service modules, plus the lunar lander module – all on one rocket. The reason for my surprised reaction is the intention to put people on top of such a large vehicle. The energy contained within its chemical propellants is equivalent to that of a small atom bomb. Despite the existence of a dedicated launch escape system, this seems to me to be an unnecessary risk. 
The Agency got away with it on the 13 Saturn V launches during the Apollo era, but why take the risk now? There is in fact a better – safer and more flexible – way of doing this, which was proposed during the Constellation ‘return to the moon’ programme in around 2004. At that time, the imminent retirement of the Space Shuttle in 2011 dictated a rethink of the American space programme by the then-Bush administration. There had been a realization – a hard lesson learned – that complex spacecraft like the space shuttle are dangerous. Seven flight crew were killed on launch in the Challenger accident in 1986, and another seven died when the shuttle Columbia broke up on reentry in 2003. In light of this, a new approach to launching people was recommended in the Constellation programme. In this proposal, to launch the crewed Orion spacecraft a new man-rated launch vehicle was developed called Ares 1 using existing components derived from Shuttle and Apollo hardware. This new vehicle was small and very simple, with a genuine crew escape system, with the result that it would be very reliable and safer for future crew launches to Earth orbit and for lunar missions. The hardware for the mission, for example the lunar lander, equipment required for developing or supplying a moon base and a propulsion stage, would be launched separately on an uncrewed, heavy-lift launch vehicle, called Ares 5. Subsequently, the crewed vehicle and the mission payload vehicle would rendezvous in orbit, before departure for the moon. Although the Ares 1 and 5 launch vehicles were to be developed primarily for lunar missions, NASA envisaged a wider role for them involving crewed space missions to destinations other than the moon. A test flight of Ares 1 was performed, designated Ares 1-X, but nevertheless the Constellation programme was cancelled by the incoming Obama administration in 2010. 
With the retirement of the Shuttle in 2011, this left the US Space programme in the remarkable position of being principal operator of the International Space Station, but without any means for US astronauts to reach it in Earth orbit, other than by ‘hitching a ride’ on Russian crewed vehicles. For more detail about the Constellation programme, please see (2). You might ask, why am I discussing return-to-the-moon space programmes in a blog to do with science and faith? Well firstly, with my physicist’s hat on, the Artemis launch is a truly major event in the realm of the physical sciences. But then secondly, and perhaps less obviously, the spiritual or religious experiences of astronauts are worth reflecting upon. And this is not limited to moon-walking astronauts, but can be extended to those who have spent considerable time in Earth-orbiting space stations.

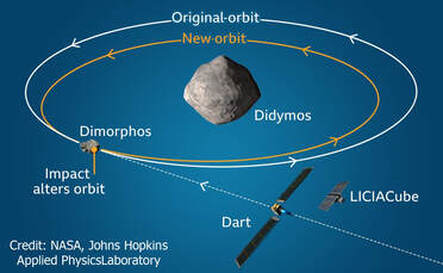

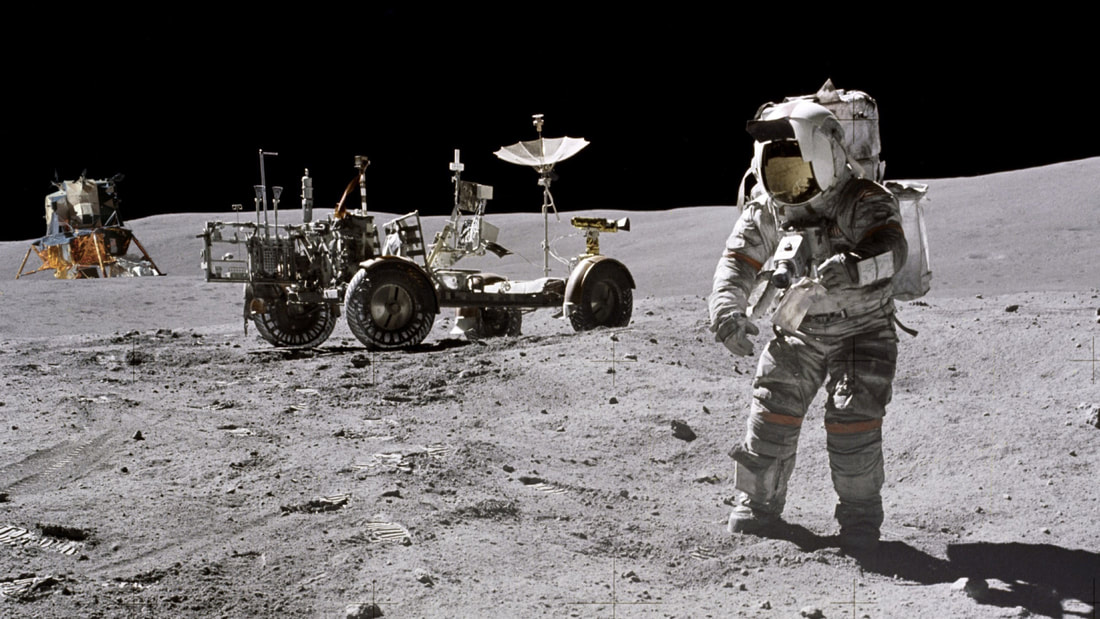

It is no secret that several of the Apollo astronauts were practicing Christians. Perhaps the most overt evidence of this is the remarkable occasion when the three astronauts aboard the Apollo 8 lunar-orbiting mission, Frank Borman, Jim Lovell and William Anders, read the first 10 verses of the first chapter of Genesis on Christmas Eve in 1968 (3). Then, on the occasion of the first historic landing mission Apollo 11, shortly before the lunar surface walk lunar module pilot Buzz Aldrin addressed the people of Earth: “I would like to request a few moments of silence … and to invite each person listening in, wherever and whomever they may be, to pause for a moment and contemplate the events of the past few hours, and to give thanks in his or her own way”. He then celebrated communion (the taking of bread and wine in remembrance of the sacrifice of Jesus Christ). Also, the commander of the final landing mission Apollo 17, Eugene Cernan, made no bones about his faith and was awed by what he believed was God’s amazing creation while on the lunar surface. He later commented: “There is too much purpose, too much logic [in what we see about us]. It was too beautiful to happen by accident. There has to be somebody bigger than you, and bigger than me …” (4). Other moon-walking astronauts related spiritual experiences induced by their journey to the lunar surface. Notably Apollo 14 astronaut Edgar Mitchell commented that “something happens to you out there”, and Apollo 15 astronaut Jim Irwin quoted the Bible during his lunar walk (5), and felt “touched by grace”. I guess it’s not too surprising that devout spacefarers would relate how their lunar mission influenced and strengthened their faith. Perhaps what is more remarkable is that many experienced a spiritual dimension in their lunar visit, simply by virtue of the ‘cosmic awe’ they encountered. It would seem that space in some way connects us to the divine. 

Graham Swinerd Southampton, UK September 2022

(1) The ATV was used for supply missions to the International Space Station up to 2015.
(2) Graham Swinerd, How Spacecraft Fly: Spaceflight without Formulae, Springer, 2008, pp. 222-225.
(3) Graham Swinerd & John Bryant, From the Big Bang to Biology: where is God? KDP publishing, 2020, p. 45.
(4) Ibid., p. 208.
(5) Holy Bible (NIV) Psalm 121:1 “I lift my eyes to the mountains – where does my help come from?”

Graham writes …

This blog post is a very brief heads-up about the upcoming impact event, which is the centrepiece of NASA’s DART (Double Asteroid Redirection Test) mission. This will occur, as planned, on 26 September. For more detail about the mission, please refer to the December 2021 blog post, which includes a video discussion between John and myself.

Analysis of the impact dynamics will be acquired by examination of the changes in Dimorphos's orbit.

Essentially, this is the first spacecraft mission devoted to planetary defense science. In particular, the objective is to study the effectiveness of the kinetic impactor technique in changing the orbit of an asteroid to prevent a future, devastating asteroid collision with our home planet. Although collisions with a large asteroid (greater than, say, 1 kilometre in diameter) are very rare, we know that such events are inevitable in the long term. For this test, the target asteroid is a 160 metre diameter object called Dimorphos, which itself is in orbit around a larger asteroid (780 metres across) called Didymos. It’s worth pointing out that neither object poses an impact threat to the Earth! The idea is that the spacecraft will impact Dimorphos, causing a tiny change in its orbital speed.
Although this change is very difficult to measure directly, its magnitude can be determined very precisely by observing long-term changes in Dimorphos’s orbit around Didymos. In particular, the resulting cumulative change in its orbit period over many orbit revolutions can be observed subsequently using Earth-based telescopes. Watch out for media coverage of this historic event in the coming days and, in due course, for information about what DART tells us about planetary defense.

Graham Swinerd Southampton, UK September 2022

John writes ...

Credit: John Bryant

In June’s post on this blog, I wrote about the CO2 emissions arising from production of different types of food; I also looked at the amount of land needed to produce the same range of foods. The obvious conclusion to draw from these data is that, both nationally and globally, we need to reduce the consumption of meat and meat-based foods in favour of plant-based foods. A similar conclusion was reached in the 2020 report published by the UK Government’s Food Adviser, Henry Dimbleby (1). I need to emphasise that neither Dimbleby nor I am saying that everyone must be vegetarian or vegan; that is a matter of personal choice (we also note that some types of land are not suitable for crop production). However, we are emphasising that for the sake of the climate (and biodiversity) and in order to feed a growing global population, total meat consumption needs to be drastically reduced and plant-based food consumption needs to be increased. From a Christian perspective we can say that this shift is an aspect of both good stewardship and care for our neighbour.

Credit: John Bryant

This imperative to increase the consumption of plant-based foods also has implications for the plant science research community. Can we improve productivity and increase the efficiency of land use while at the same time reducing the need for application of fertilisers and pesticides?
Understanding how plant genes work at the most fundamental level was the main area of my own research for many years. The combination of this level of research with studies of plant growth and crop yield will be vital if plant-based agriculture is to meet the challenges of the 21st century and beyond, as I recently discussed with my friend and colleague, Professor Ros Gleadow. In addition to being a Professor at Monash University, Melbourne, Ros is President of the Global Plant Council, an organisation dedicated to promotion of plant science education and research and to social and global justice in the applications of developments in the science. Earlier this summer she came to Europe to attend some conferences and meet other plant scientists. She spent two days in Exeter during which we were able to have extensive discussions about plant science research in general and our own interests in particular.

Credit: Ros Gleadow

During her visit we spent a few hours in the Department of Biosciences at Exeter University, talking to some of the plant molecular biologists about their research. Amongst the several beautiful (and I use the word deliberately) projects, I want to mention just two. Firstly, many years of focussed research have revealed a network of responses at genetic level to the main stresses that climate change imposes on plants, namely heat and drought. This will facilitate the breeding of crops (probably using GM and genome editing techniques) that are more resilient to climate change. Secondly, plants that apparently cope well with increased temperatures may be less able to cope with other stresses such as attacks by bacteria, fungi or viruses. It is a complex picture that will need more work to understand.

More recently I have been able to continue my conversation with Ros, now back in Melbourne, courtesy of Zoom. There is a video that accompanies this blog post, which you can see by clicking here.
We talk about the work of the Global Plant Council, about the need for fairness and equality in all aspects of plant science and its applications and about priorities in plant science research. One very recent development that excites both of us is the use of GM technology to increase the efficiency of the mechanisms that plants (in this case, soya bean plants) use to capture the energy from sunlight. This led to a very marked increase in the rate of photosynthesis. It may be hard for non-biologists to appreciate how amazing this is. Because photosynthesis traps and uses energy from the sun, it is the ‘engine’ that drives all life on Earth. For an individual plant, increased rates of photosynthesis imply greater growth rates and greater productivity which is exactly what the international team of researchers found. The importance of this work was recognised by the media. In the UK, ‘serious’ newspapers (2) ran articles on it and it also featured on the BBC’s website (3). The excitement is certainly justified. If the technology can be used with other crops and especially with the major cereals, it will be a real ‘game-changer’ for global food production. As the research team leader, Professor Stephen Long, University of Illinois stated ‘This result is really relevant right now, [when] one out of 10 people on the planet are starving’. So, watch this space: we will report significant developments here. John Bryant

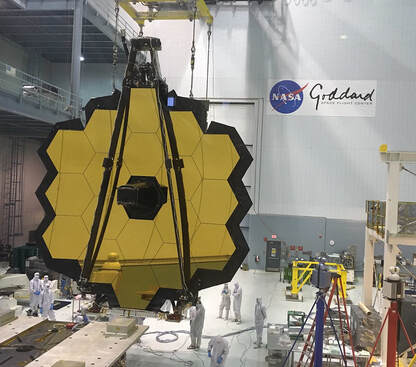

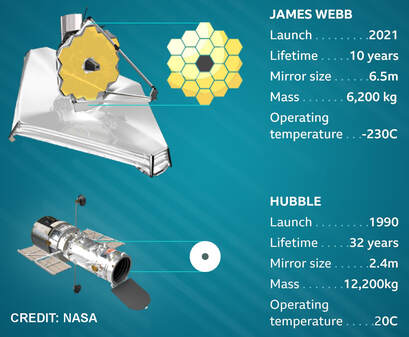

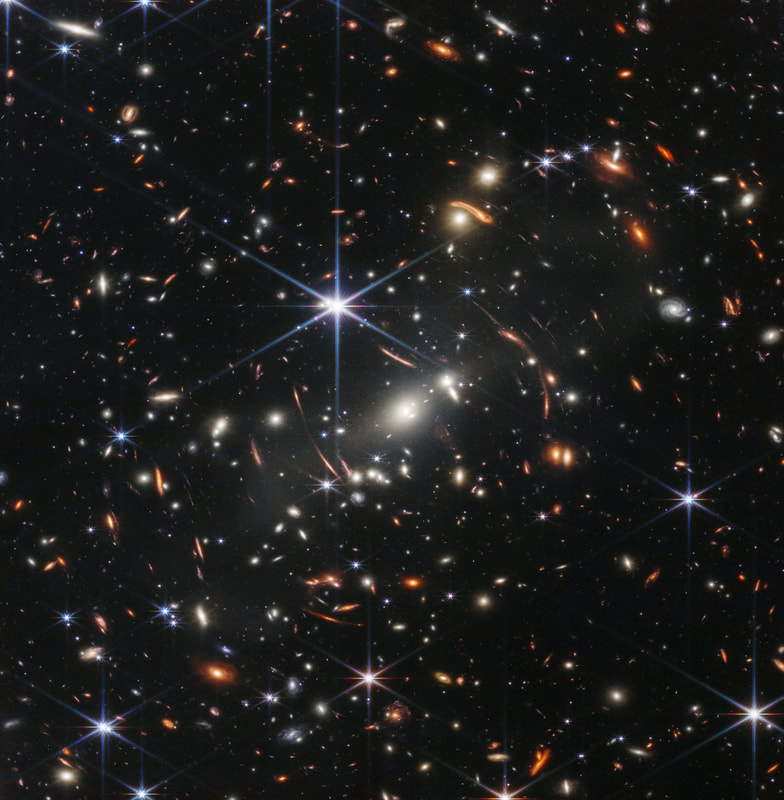

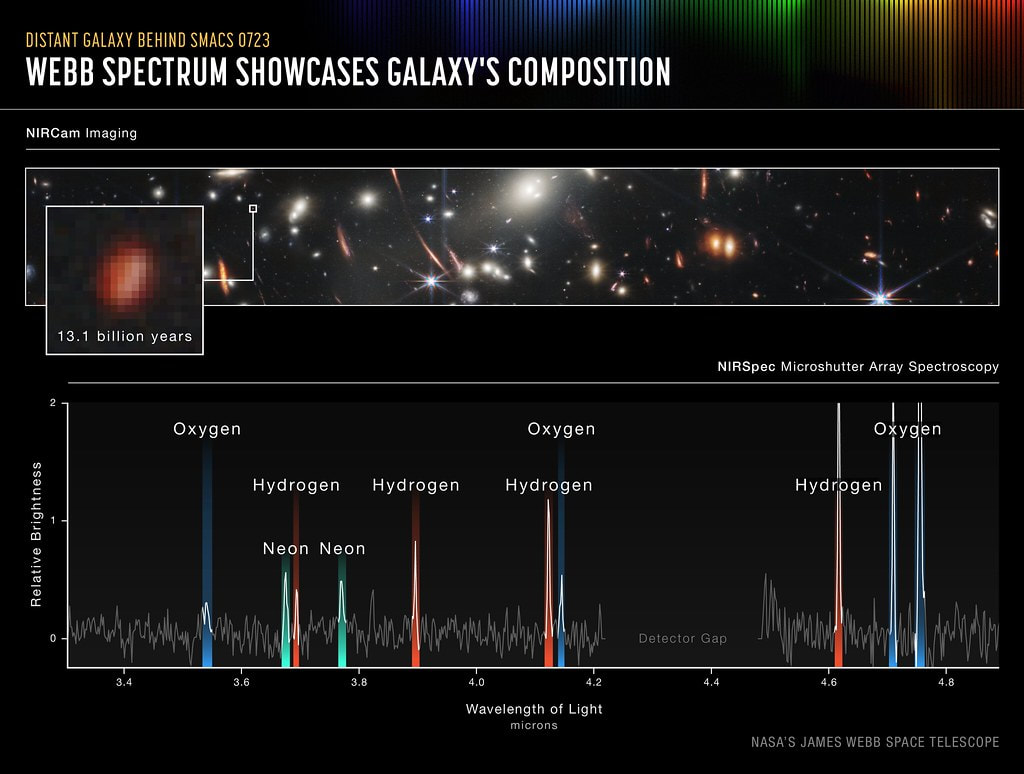

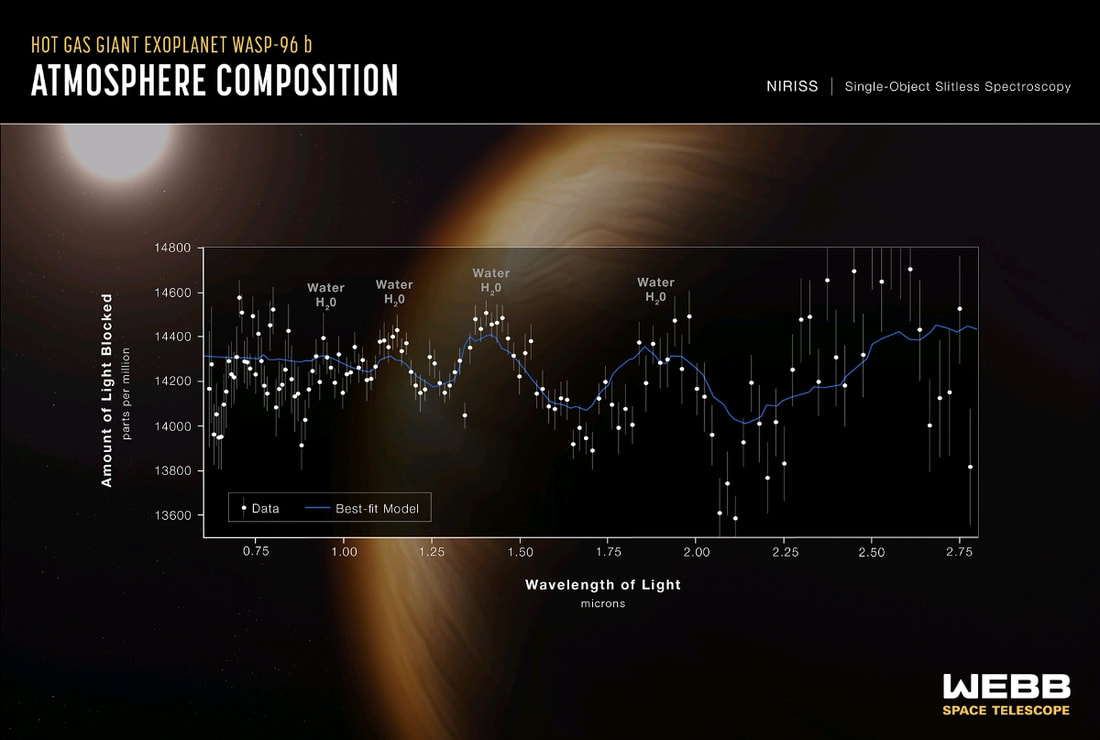

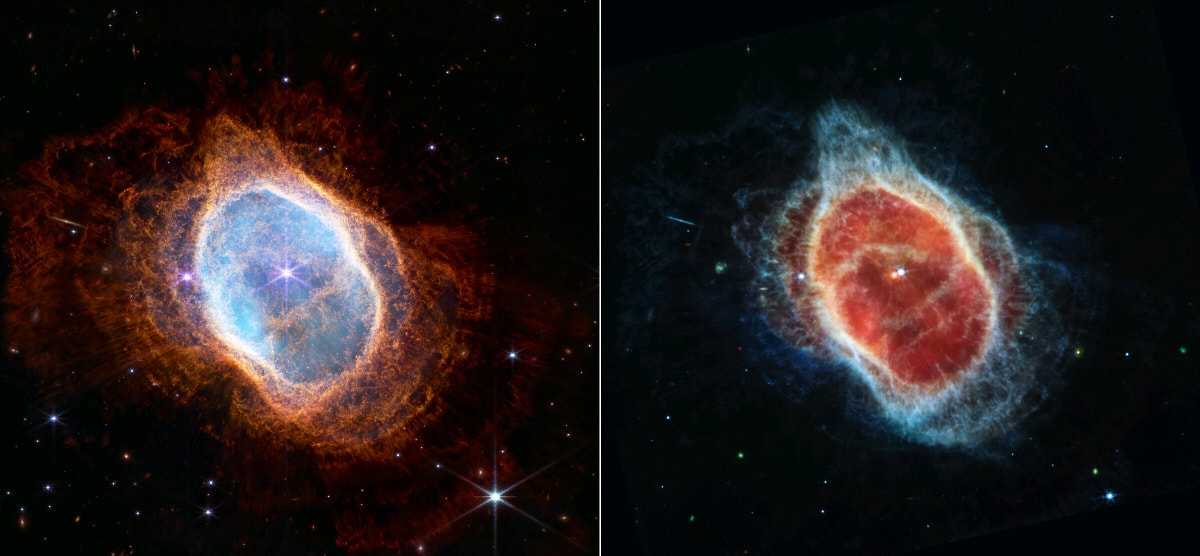

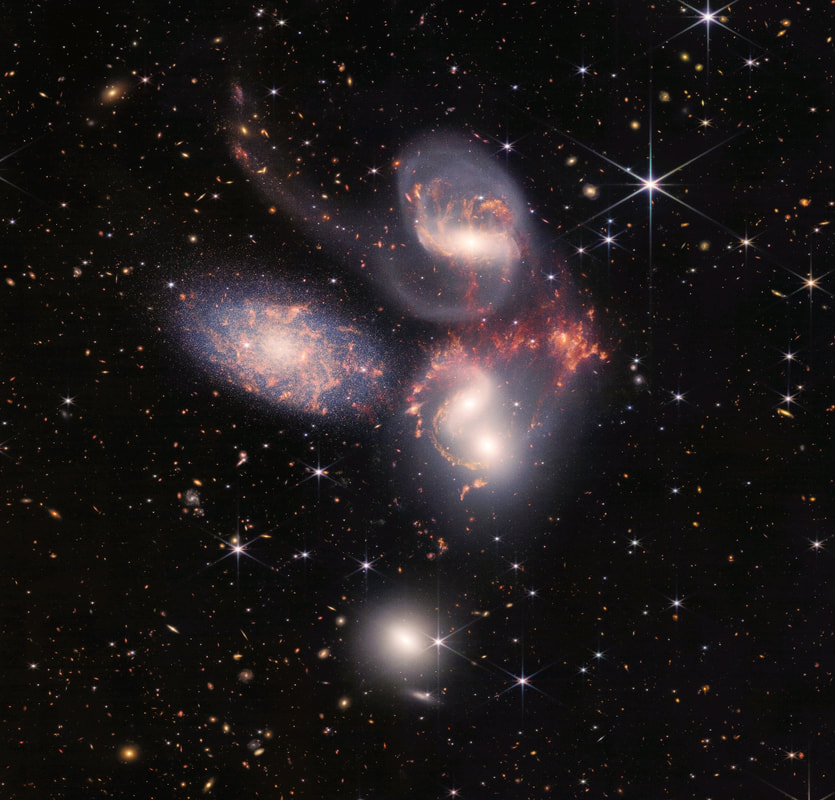

Topsham, Exeter August 2022

(1) In a recent interview by The Guardian newspaper, Dimbleby repeated this recommendation: ‘England must reduce meat intake to avoid climate breakdown, says food tsar’, The Guardian, 16 August 2022.
(2) E.g., ‘New GM soya beans give 25% greater yield in global food security boost’, The Guardian, 18 August 2022.
(3) https://www.bbc.co.uk/news/science-environment-62592286

JWST mirror in clean room environment. Credit: NASA Goddard.

Graham writes …

After decades of development, and 6 months of launch, deployment, orbit acquisition and commissioning, the JWST is finally ready for action. It was on the 12th of July 2022 that NASA/ESA/CSA (1) released the first science results of the new space observatory, and these received significant media attention. In what follows, I will give a brief overview of the science revealed in the new images. For direct access to these and future images, and their interpretation, you can find the JWST image gallery by clicking here.

One of the most striking is the first JWST deep field image, as shown below. To acquire this, the telescope is pointed at a fixed position on the celestial sphere for an extended period. The Hubble Space Telescope (HST) acquired similar deep field images, but these took multiple weeks of stable pointing to build. This first JWST deep field was achieved in less than a day, mainly because of two factors – the light gathering area of the JWST mirror is about six times that of the HST, and the JWST is optimized to operate in the infra-red (IR) part of the spectrum.

In previous blogs I have discussed what the IR optimization means, but it’s worth a brief recap. As you may recall, the IR part of the spectrum corresponds to heat radiation, as you can experience when sitting by a well-stoked open fire.
It may appear strange to design the telescope to operate in this part of the electromagnetic (EM) spectrum, until one realises that we ‘see’ very distant objects – such as the first stars and galaxies – in this part of the spectrum. When these ancient objects formed, they generally emitted the peak of their radiation in the visible part of the EM spectrum. However, due to the expansion of the Universe, the fabric of space-time itself has significantly ‘stretched’, and by the same token so has the wavelength of the emitted radiation. So all those interesting events that occurred just a few hundred million years after the creation event are most readily studied now by examining the IR part of the EM spectrum, which lies at longer wavelengths, beyond visible red. See (2) for more detail.

Comparison of the Carina Nebula in visible light (left) and infrared (right), both images by Hubble ST. Credit: NASA/ESA/M. Livio & Hubble 20th Anniversary Team (STScI).

The other advantage of using IR, as you may recall, is that the longer wavelength is scattered less by dust and debris, as illustrated by the accompanying images, both acquired by the HST. The comparison shows the Carina Nebula in visible light (left) and infrared (right). The visible band image may be more pleasing aesthetically, but the infrared image is more revealing scientifically and, in this case, exhibits stars that weren’t visible before.

Getting back to the JWST deep field image, remarkably the angular size of the image on the sky is equivalent to the angle subtended by a grain of sand at arm’s length! So the stars (local to the Milky Way galaxy), galaxies and other features are all contained within a very small area of the sky. One can only try to imagine how many galaxies there may be across the entire sky.
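The wavelength stretching described above can be put into numbers. The following is a minimal Python sketch rather than a calculation from the original post: it applies the standard relation that the observed wavelength equals the emitted wavelength multiplied by (1 + z), where z is the redshift. The function name, the 500 nm sample wavelength, the 700 nm figure used for the red end of the visible band, and the redshift values are all illustrative assumptions.

```python
# Illustrative sketch: how cosmological redshift moves visible light into the infra-red.
# All numerical inputs below are assumptions chosen for illustration only.

VISIBLE_RED_LIMIT_NM = 700.0  # assumed approximate red end of the visible band


def observed_wavelength_nm(emitted_nm: float, z: float) -> float:
    """Return the observed wavelength for light emitted at `emitted_nm`
    from a source at redshift `z`, using lambda_obs = lambda_emit * (1 + z)."""
    if emitted_nm <= 0:
        raise ValueError("emitted wavelength must be positive")
    if z < 0:
        raise ValueError("this sketch only handles non-negative redshift")
    return emitted_nm * (1.0 + z)


if __name__ == "__main__":
    emitted = 500.0  # green light, in nanometres (illustrative)
    for z in (0.0, 1.0, 8.5):  # hypothetical redshifts, from nearby to very distant
        observed = observed_wavelength_nm(emitted, z)
        band = "infra-red" if observed > VISIBLE_RED_LIMIT_NM else "visible"
        print(f"z = {z:>4}: {emitted:.0f} nm arrives at {observed:.0f} nm ({band})")
```

For a hypothetical source at a redshift of 8.5, green light emitted at 500 nm would arrive at 4,750 nm, well beyond visible red, which is why an infra-red telescope is the natural tool for studying the earliest galaxies.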
HST deep field images suggested a total of about 100 billion galaxies in the visible Universe, but it will be interesting to see what an updated JWST estimate may be. The other obvious features are the apparently distorted images of galaxies, which appear as stretched, curved arcs of light. These are due to a phenomenon known as Einstein Rings, although in this case the rings are obviously fragmented. They are created when light from a galaxy or a star passes by a massive object on its way to the Earth. The massive object produces a gravitational lens which bends the light. If the source, lens, and observer are all in perfect alignment, the light appears as a ring, hence the name.

Clearly there are galaxies galore in this single image, many of which are billions of light years distant. One of the principal aims of the JWST project is to examine the processes that led to the development and evolution of galaxies. To demonstrate the power of the JWST NIRSpec (Near Infra-Red Spectrometer) instrument, the science team identified a galaxy, the light from which has taken 13.1 billion years to reach us (recall that the Big Bang event occurred around 13.8 billion years ago). Examining this light, the team produced the spectrum below, which features several prominent emission lines corresponding to its chemical composition. Please refer to the May 2022 blog post for more details of the JWST payload instruments.

Coming closer to home, the new observatory is also able to analyse the atmospheres of exoplanets – planets outside our own Solar System – using the NIRISS instrument (Near Infra-Red Imager and Slitless Spectrometer) to attempt to detect potential signatures of life elsewhere. The target test planet is about 1,150 light-years away, located in the southern-sky constellation of the Phoenix. Designated ‘WASP-96 b’, it is a large, hot planet orbiting very close to a Sun-like star (and therefore not in the circumstellar habitable zone).
As can be seen in the image below, the JWST spotted the unmistakable signature of water, signs of haze and evidence of clouds. Please note that the background image of a planet is there for purposes of presentation, and is not an image of WASP-96 b. But one thing that can be said, however, is that this is the most detailed exoplanet spectrum yet acquired. Remaining within the Milky Way galaxy, at about 8,000 light years distance and in the Southern constellation of Carina (the keel), lies the Carina Nebula (NGC 3324). This remarkable object is a huge cloud of gas and dust which is effectively a stellar nursery. One of the first JWST images shows a small part of this nebula – see below. While taking a moment to appreciate the beauty and scale of the scene, it is also the case that it offers the science community the opportunity to examine the relatively ‘rapid process’ of stellar birth, which typically lasts only a few tens of thousands of years. In this image, previously hidden baby stars have been uncovered by JWST’s infra-red eye. In this type of object, the new telescope is able to reveal a significant number of such embryonic stars at different stages of their development, so allowing better understanding of the evolutionary process of stellar birth. The next image is, again, of a ‘local’ object, the Southern Ring planetary nebula (NGC 3132) which is about 2,000 light years away in the southern sky constellation of Vela (the sails). The first thing to note is that planetary nebulae have nothing to do with planets. They are stars that have cast off a glowing ring of gas, which produces a roughly circular object. In the old days this disc-like object could be confused with the image of a planet. The JWST image below shows two views, using different payload instruments. The picture on the left was acquired using the NIRCam (Near Infra-Red Camera) instrument. 
In this part of the EM spectrum the image is closer to that which would be acquired in the visible band, showing off the surrounding stars and detail of the ejected gas and dust cloud. The image on the right was acquired using the MIRI (Mid Infra-Red Instrument), and at these longer wavelengths the telescope can penetrate some of the obscuring gas and dust to reveal that the central object is actually a binary star. The two stars orbiting around each other are very close, one being blue and the other red. It is likely that it was the red star that shed the gaseous layers to produce the overall disc-like image.

Finally, we go extra-galactic again in the last image of this initial release of JWST pictures – see below. This shows a feature called Stephan’s Quintet (NGC 7318B being the brightest member), named after the astronomer who discovered it in 1877. This comprises five galaxies, four of which are gravitationally bound together. The galaxy on the left-hand side of the picture is actually much closer to us than the rest of the cluster. The four local members are about 290 million light years distant, in the northern sky constellation of Pegasus (the winged horse). The picture is a composite, built from about 1,000 JWST images in both the near and mid infra-red parts of the spectrum. The angular size of the image is about 1/5 the diameter of the moon (approximately 6 arc minutes) and also features foreground stars (local to our own Milky Way galaxy), distinguished by the 8-spiked diffraction pattern. Remarkably, all the other objects in the image are very distant galaxies. I haven’t tried to count them but they must number in the hundreds.

I hope you have enjoyed this brief tour through the first JWST science images. It has certainly been worth waiting out the many years needed to bring the JWST project to fruition.
Speaking as a scientist, I think they are awesome, and no doubt herald the beginning of yet another historic chapter in the annals of astrophysics and cosmology. There is a whole lot more to come in this story! As a Christian believer, the images bring into sharp focus the words of Psalm 19, verse 1: ‘The heavens declare the glory of God; the skies proclaim the works of his hands’(3). In my Christian journey, my first step, inspired by the science, was that I had become more comfortable with the idea of a creator God as the engineer of the observed fine-tuning of the cosmos (see (4) for more detail). By the same token, each time I experience the brilliance and warmth of the Sun on a beautiful summer’s day, that ‘gravitationally-bound fusion reactor’ in the sky brings home the notion of God’s creativity and power. In the same way, these new data from the JWST are an amazing reminder of God’s power and majesty. Wow!

Graham Swinerd Southampton July 2022

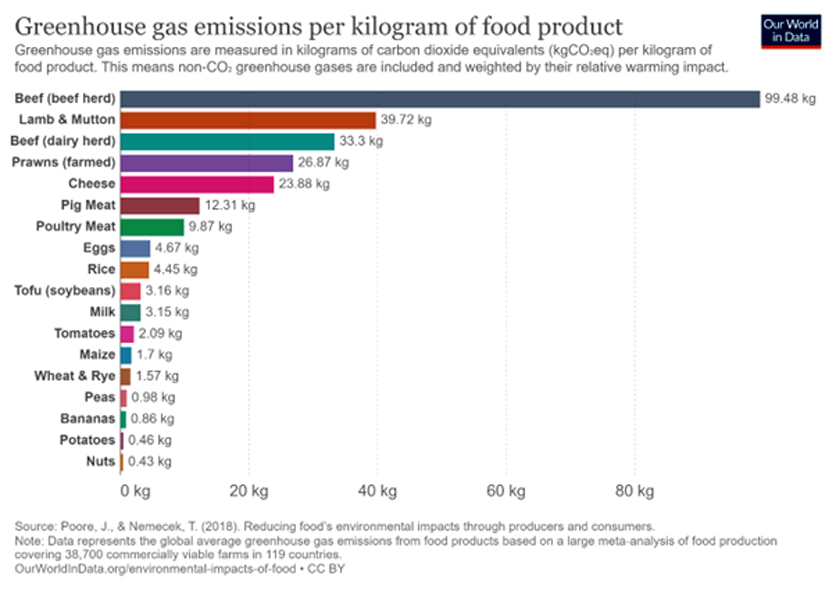

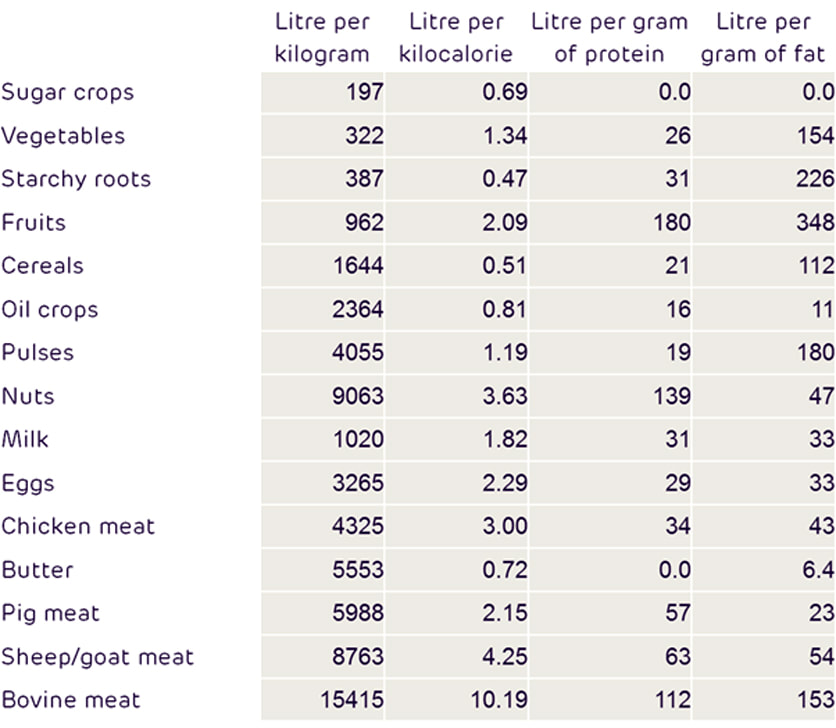

Credit: John Bryant

John writes …

Plants and our planet.

My PhD supervisor, Dr (later Professor) Tom ap Rees often quoted the phrase which I have used as the title of this blog. It is actually part of a verse from the Bible: 1 Peter, chapter 1, v 24: ‘All flesh is grass and all its glory like the flowers of the field; the grass withers and the flowers fall …’. The verse refers to the transience of human life and my supervisor had doubtless heard his father, a church minister, quote it at various times. However, Tom ap Rees’s use of it in our lab conversations took us in a very different direction. He was referring to our total dependence on plants, which was one of his major motivations for doing research on how plant cells control their metabolism and which has driven my research on plant genes and DNA.

Jubilee Oak Tree. Credit: John Bryant.

Let us look at this a little more closely. I am very fond of saying that without plants, we would not be here. This may seem rather sensationalist but it is true. It is a statement of the dependence of all animals (and indeed of many other types of organism) on green plants for their very existence. We see hints of a partial understanding of this in the media as they debate ways of mitigating climate change. Planting trees is correctly lauded as a means of capturing carbon dioxide from the atmosphere while at the same time releasing oxygen. Further, these two processes have figured strongly in the evolution of life on Earth, as we describe in Chapter 5 of the book: the first occurrence of photosynthesis in simple micro-organisms (similar to modern blue-green bacteria) about 2.8 billion years ago and the invasion of dry land by green plants about 472 million years ago had major effects which have led to the development of the biosphere as we know it today.
However, what is often not emphasised enough in our discussions is that the process which takes in carbon dioxide and gives out oxygen, namely photosynthesis, uses energy from sunlight to drive the synthesis of simple sugars. This process requires light-absorbing pigments, mainly the chlorophylls, which are green because they absorb light in the red and blue regions of the spectrum. The mixture of light wavelengths which are not absorbed gives us green. Green plants are thus our planet’s primary producers. This ability of plants to ‘feed themselves’ (autotrophy) is essential for the life of all non-autotrophic organisms, namely animals of all kinds, fungi and some micro-organisms. Non-autotrophic organisms such as ourselves are thus directly or indirectly dependent on green plants for their nutrition. We eat plants or we eat organisms that themselves eat plants. Thus, I repeat ‘Without green plants we would not be here’. ‘All flesh is grass’.

Credit: John Bryant

Plants and population.

I now want to look at this in the context of feeding a hungry world at a time of changing climate (1). Current estimates from charities such as TEAR Fund, Christian Aid and OXFAM indicate that about 800 million people are undernourished. In 2020, 9 million people actually died from malnutrition. By comparison, the combined number of deaths from malaria, HIV, TB and flu in the same year was 3.17 million. Poverty is undoubtedly one of the main drivers of this situation: food needs to be more readily and cheaply available but food production also needs to be increased. Further, current projections suggest that the global population will increase to about 9 billion by 2050, an increase of about 1.05 billion on the current figure (2), with the vast majority of the increase occurring in low- and middle-income countries (LMICs).
At the same time, agricultural land is being lost to the effects of climate change and there is also some loss in order to house the increasing population. The situation has been described as a perfect storm. I am going to focus specifically on the tension between two imperatives that I described in the previous paragraph, namely care for planet Earth and care for its human inhabitants. What are the environmental ‘costs’ we incur as we produce enough food for a population which is already fast approaching 8 billion and which is set to reach 9 billion within 30 years? The table below sets out one of those costs, namely the production of greenhouse gases. It is immediately and obviously apparent that farming of animals for food is far more ‘expensive’ than farming of crops. For example, beef production produces more than 50 times the quantity of greenhouse gases per kilogram of food than does wheat production. We need to note in passing that this way of expressing the data (‘per kilogram of food product’) places milk (whether from cows, sheep or goats) in an anomalously low position on the chart because 1 kg of milk is largely water. We get a better idea of the costs of producing the main nutrients in milk – protein and lipid - by looking at cheese production. I now want to look at two other aspects of the costs of food production, land use and water use. These must both come into our consideration as we attempt to balance the tensions involved in ‘feeding the nine billion’. Firstly, about 75% of the world’s agricultural land is used for animal production. This figure includes land that is used to grow crops for animal feed. Crops for direct human consumption occupy the remaining 25%. However, the yield of food obtained from just one quarter of the total agricultural land exceeds that obtained from the three quarters devoted to farming animals. 
Indeed, depending on the particular animal under consideration, the average protein yield per hectare of crop land is six times that of land devoted to animal husbandry. Secondly, there is water usage which, like land use, shows a huge difference between plant- and animal-based food production, as is shown in the following table, taken from Mekonnen and Hoekstra (2010). Overall then, the data from agricultural science and biogeography indicate that crop growth is ‘better’ for the planet than animal farming and that concentrating more on crops than on animals is likely to be the better route to feeding the burgeoning population. However, I am not forgetting that some land is better suited to animals than to crops, as discussed by Isabella Tree in her book Wilding (3). Nor am I trying to dictate that we should all move to a plant-based diet: in the rich industrialised nations of the world, we are privileged that this is a matter of personal choice. Nevertheless, it is clear that there must be extensive focus on plant science - genetics, molecular biology, biochemistry and physiology - over the next 20 years, both in respect of mitigating climate change and in respect of global food production. That science will be the subject of a future blog.

John Bryant Topsham, Devon June 2022

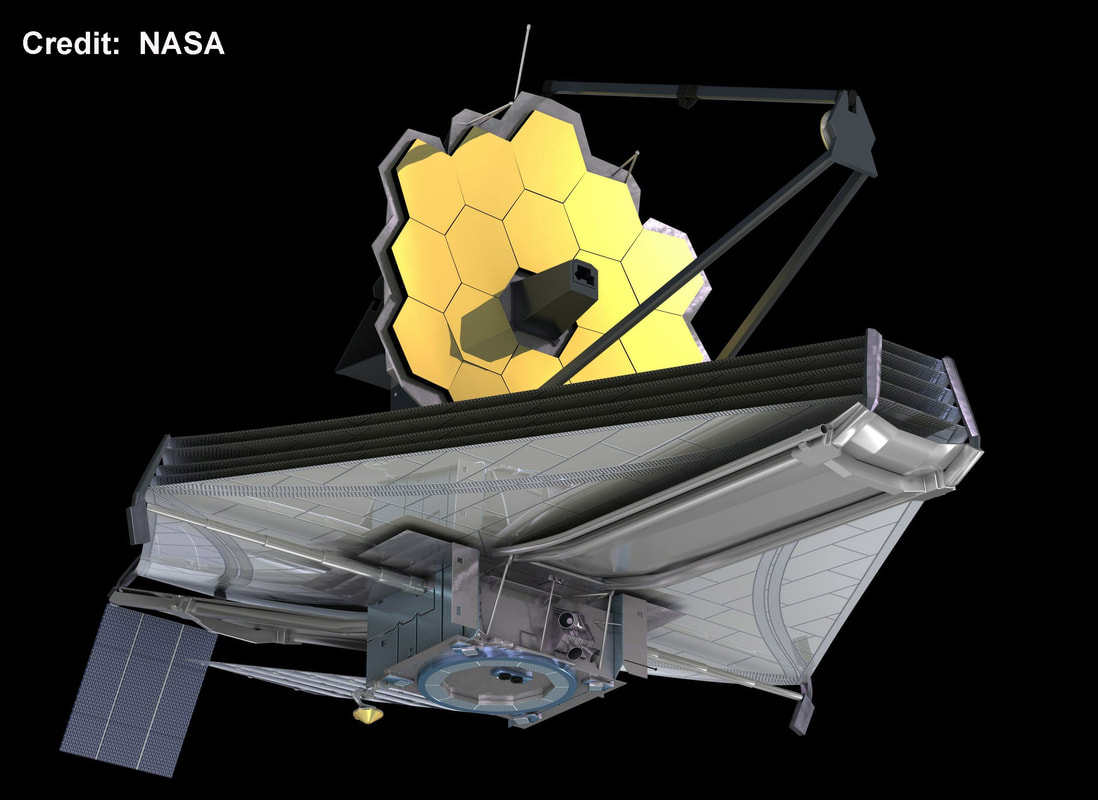

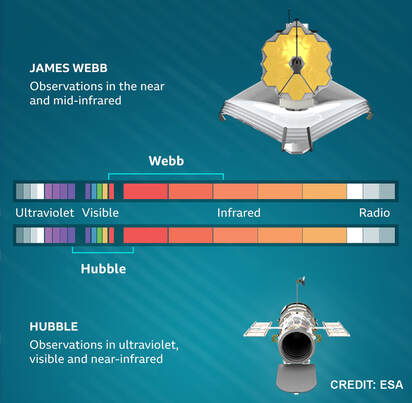

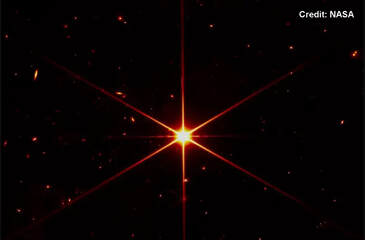

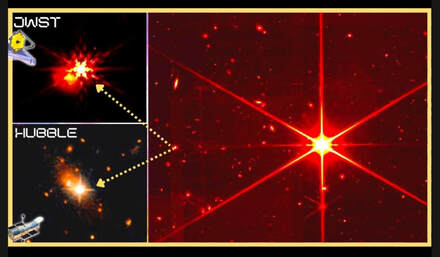

Graham writes ...

After 25 years in development, and a 10 billion dollar spend, the JWST was finally launched on Christmas Day 2021 (much to the delight, I’m sure, of the families of the army of engineers who had to attend the launch!) as I described in my January 2022 blog post. You might like to have a look back at that post, which discussed the launch and looked forward to the spacecraft deployment and orbit insertion.

James Webb Space Telescope operational configuration. Credit: NASA

A second brief post in January 2022 announced the news of the completed configuration deployment just 17 days after launch, while the spacecraft was still on its way to its operational orbit around the second Lagrange point. The deployment sequence required the successful actuation of over one hundred mechanisms, which is unprecedented in the brief history of the space age. In the context of spacecraft engineering, ‘mechanisms’ are essentially moving parts which allow the deployment of things like solar arrays and antennae, which usually need to be stowed in a compact configuration for launch. I remember a time, in the 1980s, and to some degree the 1990s, when it was considered good practice to minimise the number of mechanisms, as each one represented a potential single-point failure that could terminate the mission of a very expensive spacecraft. I think it’s fair to say that the complex deployment sequence of the JWST has finally put that philosophy of ‘mechanism-phobia’ to bed. If you haven’t seen this remarkable sequence there is a link to an excellent video in my first January 2022 post.

Spacecraft characteristics: the JWST versus the HST.

After the deployment, the spacecraft continued its journey outwards towards its final operational orbit, which it reached about 30 days after launch.
Three mid-course corrections (thruster firings) placed it into a large, sweeping halo orbit around the L2 point about 1.5 million km from Earth. L2 is an equilibrium point in the Sun-Earth-spacecraft three-body system (see January post and (1) for details). However, it is a point of unstable equilibrium, which means that the spacecraft will have to continue to execute course corrections during its operational lifetime to keep it on-station around L2.

Spectral bands: The JWST versus the HST.

The main features of the spacecraft configuration are a 6.5 m (21 feet) primary mirror, consisting of 18 hexagonal, gold coated elements and a tennis court-sized sun shield. The latter is required to passively cool the payload instruments to a temperature of around -230 degrees Celsius, as the telescope is optimised to operate in the infra-red (heat radiation) part of the spectrum – see diagrams. The temperature had already decreased to about -200 degrees C by early January. Since the instruments must be kept on the dark side of the observatory, the telescope can access only 40% of the sky on any specific day of the year, and it will take about 6 months to access the whole sky.

Prealignment image of a single star appears as 18 different stars.

Each hexagonal element of the mirror has 7 actuators (mechanisms again!) to tilt, translate, rotate and deform each mirror surface to ensure they all operate as one ‘perfect’ parabolic surface. Prior to the alignment process, the telescope imaged a single bright star in the constellation of Ursa Major (aka ‘The Plough’ in the UK) called HD84406. The resulting image shows the star in 18 different positions.
To undertake the alignment process of the individual elements, a test star in the constellation of Draco (the Dragon) called 2MASS J17554042+6551277 (2) was chosen because it is effectively an easily identifiable ‘lonely star’ with few nearby neighbouring stars. Using this star, each hexagonal element was adjusted so that the 18 separate images were amalgamated into a single point at the telescope’s focus to an accuracy of around 50 nm (1 nm = 1 nanometre = 1 thousand-millionth of a metre). This was a landmark process in the commissioning of the telescope, which was completed successfully in early May 2022.

The Test Star in the constellation of Draco used to align the elements of the primary mirror.

As I write, the process of collimating each of the observatory’s instruments is underway. The spacecraft has a number of primary instruments (spacecraft engineers love their acronyms!):

The test image revealed new discoveries.

Returning to the picture of the test star in Draco, which was acquired using the NIRCam instrument, the alignment team noticed a scattering of galaxies in the background of the image, many of which were estimated to be billions of light years distant. One scientific bonus derived from this commissioning activity is the image of a galaxy which appears to be in the process of ejecting its once-central super-massive black hole (see picture). As described in (3), such super-massive black holes (with masses typically of the order of millions of solar masses) are ubiquitous at the centres of galaxies throughout the cosmos. About 8 billion light-years distant, the galaxy 3C186 is home to an extremely bright galactic nucleus – the signature of an active super-massive black hole. But this one is about 35,000 light-years from the centre of its home galaxy, suggesting it is in the process of being ejected. This object had already been imaged by the Hubble Space Telescope, and this new data from the JWST has reignited debate about what may be causing the ejection of this extremely massive object.

So, when can we expect the first operational images from the new observatory? Best guess at the moment is probably late June or early July. And what can the JWST do in furthering our understanding of the Universe? The answer to this question is related to the characteristic that the observatory is optimised to observe in the infra-red part of the spectrum, as we mentioned earlier. This is effectively heat radiation, which is why the telescope and the payload instruments need to be cooled to very low temperatures. If the JWST operated at the same temperature as the Hubble Space Telescope – around room temperature – the telescope and instruments themselves would produce thermal radiation which would swamp the incoming data from the sky.

This image gives a good impression of the size of the main mirror.
So why is infra-red good? First off, it is less prone to scattering from dust and debris (compared to visible light) so the JWST will be able to observe ‘local’ events such as the formation of stars and the development of embryonic planetary systems beyond our own. The infra-red optimisation will also enable the observation of very distant objects, such as the first stars and galaxies. When these were forming, around 13 billion years ago, the light that they emitted in the visible part of the spectrum will have been stretched in wavelength by the subsequent expansion of the fabric of space-time. The JWST will now ‘see’ this radiation in the near and mid infra-red parts of the spectrum. Also, as mentioned above, one of the payload elements has been enabled to observe exoplanets to look for signatures of habitability in their atmospheres – such as water vapour and oxygen. The JWST is part of a long heritage of astronomical instruments designed to answer the deep questions about the cosmos in which we live, such as

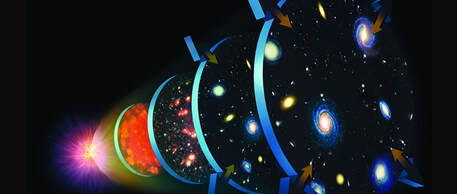

Clearly big questions, and of course no one project such as the JWST can be expected to provide all the answers. The quest for understanding is an incremental process. However, we have great expectations that, like its predecessors, the JWST will reveal new findings beyond our hopes and imagination. It is also important to realise that all observatories that utilise the electromagnetic spectrum to probe the cosmos are ultimately limited by a fundamental barrier. As described in the book (4), immediately after the Big Bang, and for some 380,000 years afterwards, the energy and matter content of the Universe comprised a dense and very hot soup of charged particles and photons. Because the photons (particles of light) were continually interacting with the particles, the radiation was unable to propagate freely so the Universe was effectively opaque. This state of affairs continued until the temperature of the Universe was low enough for the charged particles to form neutral atoms, at which point the Universe became transparent to light. Consequently, direct observation of the events of the Big Bang using the electromagnetic spectrum is veiled by this early era of universal fog.

Time slices of an evolving Universe, as we understand it. (c) Science Photo Library.

Rather than finish on this rather negative note, it should also be pointed out that we have other avenues of investigation to discover the secrets of the Big Bang, the main one currently being the emulation of the physical conditions just a fraction of a second after the Bang using particle accelerators. Another pathway is the utilisation of the new technology of gravitational wave detectors. The era of universal fog presents no barrier to the propagation of gravitational waves, and consequently the cosmos is probably awash with gravitational radiation containing information about the genesis event.
However, the science of gravitational wave detectors is very much in its infancy, and nobody quite knows where it’s going and what a gravitational wave observatory of the future might look like. That is a revolution that has yet to unfold.

At the end of the day, after all the talk of science and engineering, our amazing God has given us a rational and creative intellect to envisage and build incredible machines like the James Webb Space Telescope (and the Large Hadron Collider, come to that). The motivation that drives us to these heights of ingenuity and creativity comes from a God-given curiosity to uncover the secrets of His beautiful creation! I hope to be showing some JWST images of this in the July blog post.

Graham Swinerd Southampton May 2022

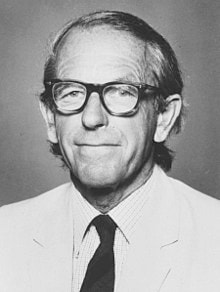

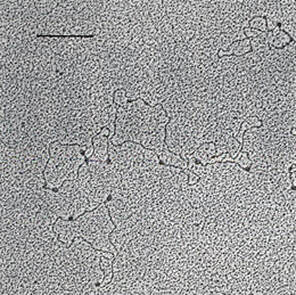

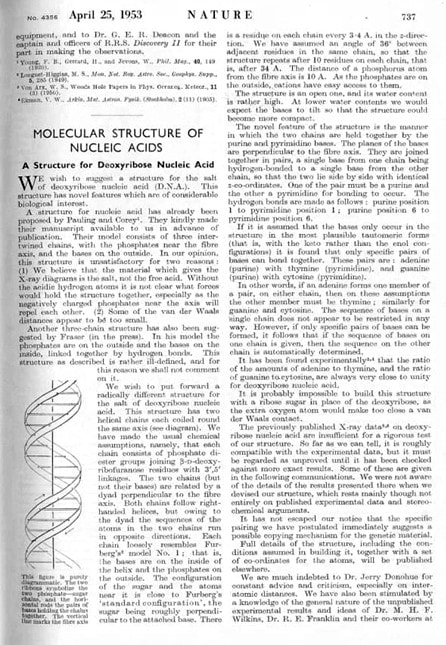

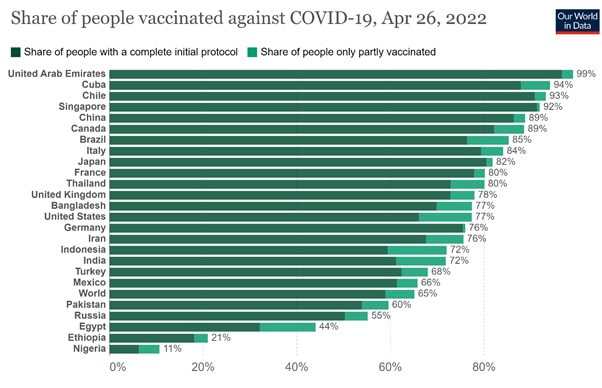

John writes …

I started to write this blog post on April 25th which is a very significant date in the history of molecular biology. On April 25th 1953, the prestigious journal Nature published the paper that announced the elucidation of the double helical structure of DNA. ‘Landmark’ is a rather over-used term but this was certainly one. Like any major discovery it led to a flurry of further experimental work. In this case, the structure gave some clear clues about the biochemical mechanisms involved in replication of DNA and in the working of genes. Understanding the biochemistry then led to research on the way in which different living organisms control that biochemistry throughout the different phases of their life, research which continues to this day.

Fred Sanger, University of Cambridge, inventor of the ‘chain-termination’ DNA sequencing technique. (Credit: public domain)

In our February vlog I talked about the Golden Age of Genetics; the discovery of DNA structure and the subsequent biochemical research were part of the prelude to that golden age, as was the decoding of the genetic code in the early 1960s. But what actually initiated the golden age was the invention of genetic modification (GM) techniques – genetic engineering (GE) – in the early 1970s, an invention that could not have happened without the biochemical and genetic research of the previous decade. Genetic modification techniques enabled scientists to study individual genes. Combination of biochemical knowledge with possibilities raised by GE techniques led to the development of methods for sequencing DNA. The first full DNA sequence, that of a bacteriophage (a virus that infects bacteria) and consisting of 5365 base pairs (DNA ‘building blocks’) was published in 1977. The golden age was underway.
Electron micrograph showing a protein binding to structural features in small circular DNA molecules that were constructed using GE techniques (see first link in reference (2)). Picture © Sara Burton, Jack Van’t Hof, John Bryant.

The developments that I have just described took place early in my career and without a doubt have enabled me and my colleagues to carry out research that previously would not have been possible. Some of our work certainly employed more conventional biochemical techniques (1) but a significant proportion was only possible because we were able to use GE and related methods in our studies of regulatory mechanisms (2). I feel immensely privileged to have contributed in a minor way to the golden age and have also been thrilled in making discoveries that give me insights into the awesome beauty and cleverness of the regulatory mechanisms that operate in living cells.

The progress that has been made in our understanding of how genes work has certainly been amazing. Thinking back to the start of my career, I would not even have imagined the possibility of knowing some of the things that are now embedded in our understanding of molecular biology. That leads to another of my ‘pet themes’. I am all for original research with ‘no strings attached’ because I believe that it is a good thing to know and understand more about the universe in which we have been placed. Further, for me as a Christian, it gives more insight into the work of our creator God. However, I also want to see the findings of science used, where possible, for the good of humankind and indeed of the whole planet. In our February vlog I mentioned several medical applications of our current understanding of DNA and genetics.
One example is the development of vaccines for COVID-19 which depends on knowledge of virus genes and, for the Astra-Zeneca vaccine, also on GE techniques. Other examples included rapid genome sequencing to identify gene-based diseases and the use of genome editing (which is in effect another type of GE) to make pig organs suitable for transplant into humans. These are undoubtedly sophisticated applications of our knowledge but they raise questions about world-wide availability, questions about which I have serious concerns. Taking the first example, the development of vaccines, in the UK many people have had three doses of COVID-19 vaccine while some have had a fourth. And yet in many of the poorer countries of the world, fewer than 20% of the population have received any vaccine and even if they have been vaccinated, they have had only one dose. In the chart, this situation is illustrated by data from Nigeria and Ethiopia. I do not have the space here to comment much further on this, except to say that it seems very wrong and surely there must be some way of addressing such glaring inequality.

The other topic mentioned briefly above, on which I want to comment further, is genome sequencing. It is undeniably useful in diagnosis of genetic diseases and in prediction of some possible future health-care needs. However, as with vaccine availability, this is medical technology of the rich industrialised nations of the world and we should not let it, wonderful though it is, blind our eyes to the still widespread occurrence of malaria, TB, HIV, childhood dysentery and so on. These diseases account for many more deaths than can be attributed directly to genetic mutations. Further, even in developed countries it is possible that the wonders of genetic medicine may lead us to take our eyes off the ball with respect to other factors that affect our health.
An anonymous GP said that for most people, the postal code of where they live gives a better picture of their health than their genome sequence. What did she/he mean? Poverty, poor housing, poor social and physical environments, less access to facilities and so on can all have dramatic effects on our health. In and around all our major cities in the UK, there are huge differences in life expectancy between people living in the most affluent areas and people living in the poorest areas; for example in Glasgow in 2021, the difference between the two extremes was nearly 20%. Amongst the significant factors contributing to these inequalities are differences in air quality. A recent article in New Scientist (3) looked at the effect of environmental pollution on health, including resistance to and recovery from diseases, reaching the conclusion that ‘For most diseases, exposure to pollution plays a far greater part in mortality than genetics.’ So, although I am thrilled to be living in the ‘Golden Age of Genetics’ I am also motivated to apply the imperative to love our neighbour as ourselves in dealing with inequalities, whether internationally or within our own country so that all may benefit from advances in biomedical science. John Bryant Topsham, Devon April 2022 (1) Bryant, Fitchett, Hughes, Sibson, doi.org/10.1093/JXB%2F43.1.31

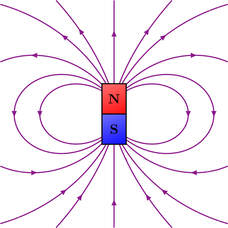

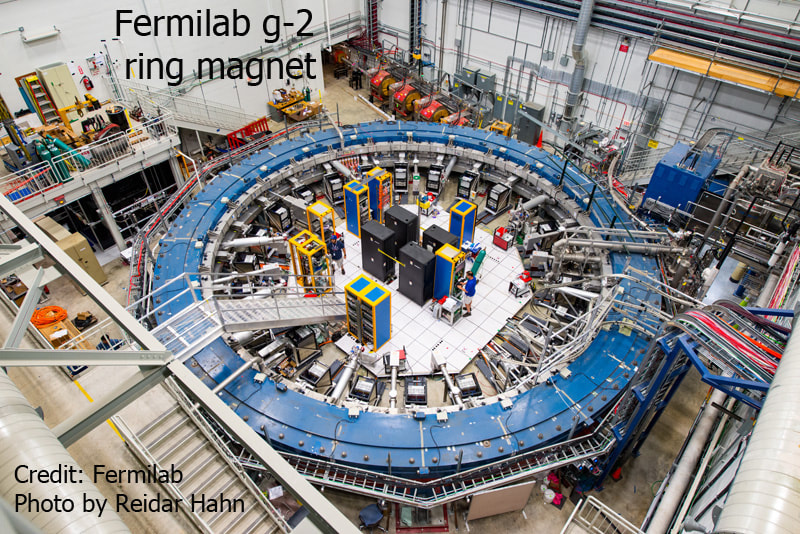

(2) Burton, Van’t Hof, Bryant, doi.org/10.1046/j.1365-313X.1997.12020357.x ; Dambrauskas et al., doi.org/10.1093/jxb/erg079
(3) Lawton, New Scientist, 29 Jan 2022, pp 44-47.

Graham writes …

Welcome back to this topic, which we discussed back in October’s blog post. As always in physics and biology, significant developments keep happening, and these have distracted me from writing further about the prospect of new physics. It might be worth revisiting the October 2021 post to remind yourself briefly of some of the fundamentals that are relevant to today’s discussion.

As discussed then, one of the main theoretical pillars of the very small – the world of atoms and subatomic particles – is the standard model of particle physics. On the one hand, we know that the theory is ‘right’ in a very fundamental way, as we can perform detailed calculations to predict the results of experiments very accurately. This is evident from what we shall see later in this post. However, on the other hand, we also know that the standard model is not comprehensive. Again, as discussed in October, the theory of gravity (Einstein’s general theory of relativity) persistently ‘refuses’ to be unified with the standard model. Also, the mysterious ‘dark universe’ – dark matter and dark energy – which is believed to comprise about 95% of the matter/energy in the Universe is missing from the model. So, we know there’s lots to be done, but we really are not sure how to go about it. All we can do is attempt to find experimental results which are not in complete accord with the theory, and then probe what such an anomaly might mean for the model. The reason why I’m blogging today is to talk about a ‘strange’ experimental result, which has been reported recently by a team of scientists working at Fermilab near Chicago, Illinois.
Given that this can be referred to as the ‘anomalous magnetic dipole moment of the muon’, or the ‘muon g-2 experiment’, we need to unpack some of the terminology to hopefully reveal something of what’s going on. So, where to start? Firstly, I’d like to look at a property of fundamental particles called spin. I think we are fairly familiar with the notion of spinning objects. If we spin up a macroscopic object, like a wheel, it acquires something called angular momentum, and the amount of angular momentum depends upon the mass of the object, how the mass is distributed and how fast it is rotating. It is the rotational equivalent of linear momentum. So, in the same way as we avoid standing in front of objects with large amounts of linear momentum, like a car travelling at speed, we also know intuitively not to tangle with an object with a significant amount of angular momentum, such as a large, rapidly rotating wheel. If we reached out and tried to grasp the wheel to slow it down, its significant rotational inertia would cause us grief.

Magnetic dipole field.

Scientists also talk about spin when referring to sub-atomic particles such as the electron. However, the properties of the ‘spin’ of an electron are very different from those of a macroscopic body. For one thing the angular momentum is quantised in terms of magnitude and direction. Also, such particles are considered to be infinitesimally small point particles, and as such talking about physical rotation makes no sense. But intriguingly they do possess intrinsic attributes that are usually associated with the property of rotation. Such particles also possess a magnetic field very similar to that produced by a tiny, simple bar magnet. This type of field is referred to as a magnetic dipole field, with the usual so-called north and south poles.
You may have seen the structure of this field in a simple school experiment by sprinkling iron filings onto a piece of paper which has been placed on a bar magnet. This type of magnetic field can be generated by the motion of an electric charge around a looped wire. So, we could imagine that the electron is rotating, carrying its electric charge in a circular path around its axis of rotation, producing the magnetic field. However, we know that this cannot be so. If we were to envisage the electron as something other than a point particle, and try to use size estimates, its surface would have to be rotating faster than the speed of light to produce the measured dipole field strength! It’s clear that particle spin is a difficult concept for everyone (not just me!) to appreciate intuitively.

So, after all that, what is the dipole moment? Well, if we place the electron in an external magnetic field, the north-south axis of the particle’s field will align with the direction of the external field, as a compass needle rotates to align with the Earth’s magnetic field to indicate north. Hence the external field exerts a torque, or moment, on the particle’s magnetic axis to bring about this alignment, and the dipole moment is the measure of how strongly the particle responds.

Another attribute of the particle which has its counterpart in macroscopic rotation is precession. If you think of a toy gyro and spin it up, then the force of gravity produces a torque which causes the axis of rotation to precess (or ‘wobble’), as illustrated in (the first half of) a video demonstration, which can be seen by clicking here. In a similar way, the magnetic axis of a particle (indicated by the black arrow in the diagram) will also precess if placed in an external, uniform magnetic field (indicated by the green arrow). This is referred to as Larmor precession, after Joseph Larmor (1857-1942).
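To give a feel for the numbers, here is a minimal sketch of how fast an electron’s magnetic axis would precess. It assumes the standard textbook relation for the spin-precession rate, omega = g × q × B / (2m), where g is a dimensionless number close to 2 for the electron. The function name, the choice of g = 2 and the 1 tesla field strength are illustrative assumptions and do not come from the post.

```python
import math

# Physical constants in SI units (CODATA values, rounded).
ELECTRON_CHARGE = 1.602176634e-19   # coulombs
ELECTRON_MASS = 9.1093837015e-31    # kilograms


def larmor_angular_frequency(g_factor: float, field_tesla: float,
                             charge: float = ELECTRON_CHARGE,
                             mass: float = ELECTRON_MASS) -> float:
    """Spin-precession rate omega = g * q * B / (2 * m), in radians per second."""
    return g_factor * charge * field_tesla / (2.0 * mass)


# Illustrative inputs (assumptions): g = 2 and a uniform 1 tesla field.
omega = larmor_angular_frequency(g_factor=2.0, field_tesla=1.0)
frequency_ghz = omega / (2.0 * math.pi) / 1e9
print(f"Precession frequency: {frequency_ghz:.1f} GHz")
```

On these assumptions the magnetic axis sweeps around tens of billions of times per second, so even a very small change in g shifts the precession rate by a measurable amount.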
I don’t know if you are still with me, but let’s come back to the media assertions about new physics, and the Fermilab announcement which mentions something called the ‘g-2 experiment’. The g here is referred to as the ‘g-factor’, which is a dimensionless quantity (a pure number) related to the strength of a particle’s dipole moment and its rate of Larmor precession. For an isolated electron, g = 2 according to Paul Dirac’s theory of relativistic quantum mechanics (QM), which he published in 1928. Subsequently, this theory has been superseded by the development of quantum electrodynamics (QED), which describes how light and matter interact. Using QED, we can perform the calculation for an electron that is not ‘isolated’. The g-factor calculation is not just about the interaction of the electron with the applied external magnetic field, but it is also influenced by interactions with other particles. This introduces another piece of quantum world terminology called quantum foam. This is the quantum fluctuation of the spacetime ‘vacuum’ on very small scales. Matter and antimatter particles are constantly popping into and out of existence so that the quantum vacuum is not a vacuum at all. For those of you with a bit of QM background, this is due to the time/energy version of the uncertainty principle. This idea was originally proposed by John Wheeler in 1955. Consequently, the electron also interacts with the quantum foam particles, and the influence of these short-lived particles affects the value of the g-factor, by causing the particle’s precession to speed up or slow down very slightly. The experimental value of the electron g-factor has been determined as g = 2.002 319 304 362 56(35), where the part in brackets is the uncertainty in the last two digits. The theoretical value of g-2 matches the experimental value to 10 significant figures, making it the most accurate prediction in all of science.

The muon is 207 times more massive than the electron.
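As a rough illustration of how QED moves the electron’s g-factor away from Dirac’s value of 2, the sketch below adds only the first and largest correction, alpha/(2π), where alpha is the fine-structure constant. This term is Schwinger’s 1948 result; it is not mentioned in the post and is brought in here as an assumption for illustration. The full calculation needs many further terms to reach the precision quoted above.

```python
import math

# Fine-structure constant (CODATA, rounded); a dimensionless number.
ALPHA = 1.0 / 137.035999

# Measured electron g-factor as quoted in the text (uncertainty digits dropped).
G_MEASURED = 2.00231930436256

g_dirac = 2.0                              # isolated electron, Dirac 1928
a_first_order = ALPHA / (2.0 * math.pi)    # leading QED correction to (g - 2) / 2
g_first_order = 2.0 * (1.0 + a_first_order)

print(f"Dirac value:          {g_dirac:.8f}")
print(f"With first QED term:  {g_first_order:.8f}")
print(f"Measured:             {G_MEASURED:.8f}")
print(f"Remaining difference: {G_MEASURED - g_first_order:.2e}")
```

Even this single term brings the prediction to within a few parts in a million of the measurement, which gives some sense of why each further decimal place demands so much more theoretical work.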
So, we find that the behaviour of an electron conforms with theory very well, but what about other particles? The recent Fermilab experiment refers to similar work looking at a particle called a muon. So, what is this? The muon is a particle which has the same properties as an electron, in terms of charge and spin, but is 207 times more massive (see the blog post for October again, for details). It is also unstable, with a typical lifetime of about 2 microseconds (2 millionths of a second), but this is still long enough for scientists to work with it. What the Fermilab team did was measure the muon’s g-factor in the same way that we have described for the electron. This time a difference between experiment and theory was observed: g(theory) = 2.002 331 83620(86), g(experiment) = 2.002 331 84121(82). Of course, the difference is tiny, so why the big deal? Well, the theoretical value describing how the quantum foam influences the value has been determined using our current model of the subatomic world – that is, the particles and forces as described by the standard model of particle physics. The fact that the experimental value differs suggests that there may be new particles and/or forces that are currently absent from our understanding of the quantum world. Another question posed by this result is why the electron conforms and the muon does not. In the theory, the degree to which particles are influenced by the quantum foam is proportional to the square of their mass. Since the muon is about 200 times heavier than the electron, it is approximately 40,000 times more sensitive to the effects of these spacetime fluctuations.

At the end of the day, do the Fermilab results have the status of a confirmed, water-tight discovery? Well, actually no.
The degree of certainty at present is at the 4.2 sigma level, which suggests that there is roughly one chance in 40,000 that the result is a statistical fluke. To get the champagne glasses out, and to start handing out Nobel prizes, further work is required to achieve a 5 sigma result (again see October’s post). So, where does all this take us? The bottom line is that it tells us there are things about the quantum world that we don’t know – which, you could say, we knew already! But this is how physics works. To paraphrase Matt O’Dowd, Australian astrophysicist, “ … to find the way forward, we need to find loose threads in the [current] theories that might lead to deeper layers of physics. The g-2 experiment is just one loose thread that was begging to be tugged. The scientists at Fermilab have just tugged it hard …”. I await further developments!

Graham Swinerd Southampton March 2022
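The sigma level can be checked from the two muon g-factor values quoted above. The following is a minimal sketch, assuming the theory and experimental uncertainties are independent and Gaussian, so that they combine in quadrature, and assuming a two-sided tail probability. The odds it prints depend on that choice of convention. The variable names are illustrative only.

```python
import math

# Muon g-factor values quoted in the text, written as integer counts of 1e-11
# above 2.002 331 00000 so that floating-point rounding does not blur them.
G_THEORY = 83620         # g(theory)     = 2.002 331 83620
G_EXPERIMENT = 84121     # g(experiment) = 2.002 331 84121
SIGMA_THEORY = 86        # "(86)": uncertainty in the last two digits
SIGMA_EXPERIMENT = 82    # "(82)"

difference = G_EXPERIMENT - G_THEORY
# Assumption: independent Gaussian errors, combined in quadrature.
combined_sigma = math.hypot(SIGMA_THEORY, SIGMA_EXPERIMENT)
significance = difference / combined_sigma

# Assumption: two-sided Gaussian tail probability.
p_value = math.erfc(significance / math.sqrt(2.0))

print(f"Difference: {difference} x 1e-11")
print(f"Significance: {significance:.1f} sigma")
print(f"Chance of a fluctuation this large: about 1 in {1.0 / p_value:,.0f}")

# Mass-squared scaling of sensitivity, using the 207:1 mass ratio from the text.
print(f"Muon/electron sensitivity ratio: {207 ** 2:,}")
```

The last line simply squares the mass ratio, which is where the ‘approximately 40,000 times more sensitive’ figure comes from.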

Authors: John Bryant and Graham Swinerd comment on biology, physics and faith.
